The Fuzzy Factor: Striving to Protect Patient Privacy in Big Data Sets

A major objective of former Vice President Joe Biden’s National Cancer Moonshot Initiative is breaking down silos and sharing cancer patients’ data across institutions. This path is critical to bringing new dimensions to understanding the molecular biology of cancer, leading to the rapid identification of new therapeutic targets and accelerated development of molecularly informed cancer therapeutics.

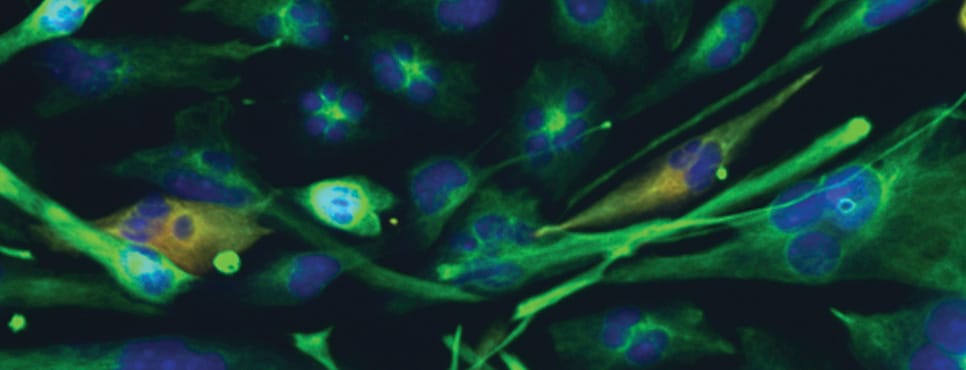

There could not be a better time to do this, given the major leaps the oncology field is making with technological breakthroughs and the resultant generation of vast amounts of genomic data. As researchers make tremendous progress with major data-sharing efforts, such as the first public release of nearly 19,000 de-identified genomic records from AACR Project GENIE, an area to monitor is protection of patient data and privacy.

HIPAA regulations to protect patient privacy

Access to patient data is governed by laws and regulations, such as the Health Insurance Portability and Accountability Act (HIPAA), passed in 1996. The patient de-identification provisions and privacy rules of HIPAA have since been updated to strengthen data protection, especially after Latanya Sweeney, PhD, then a graduate student at the Massachusetts Institute of Technology, famously re-identified the medical records of William Weld, then governor of Massachusetts. Sweeney did so by combining data released to researchers by the Massachusetts Group Insurance Commission with a voter registration list.

In a paper recently published in The New England Journal of Medicine, the authors identified ethical considerations as one of the barriers to successful data-sharing efforts. The authors, who are members of the Clinical Cancer Genome Task Team of the Global Alliance for Genomics and Health, have developed a Framework for Responsible Sharing of Genomic and Health-Related Data, which provides research consortia with “robust policies, tools, and adapted consent procedures that respect patient autonomy while supporting international data-sharing practices.”

Cyberattacks on large health databases, such as the recent “WannaCry” ransomware attack that hit the National Health Service of the United Kingdom, could potentially lead to breaches of patients’ protected health information and personally identifiable information, note the authors of another paper in The New England Journal of Medicine. The paper describes in detail how hackers could use such information in a variety of nefarious ways.

How easy is it to re-identify a patient?

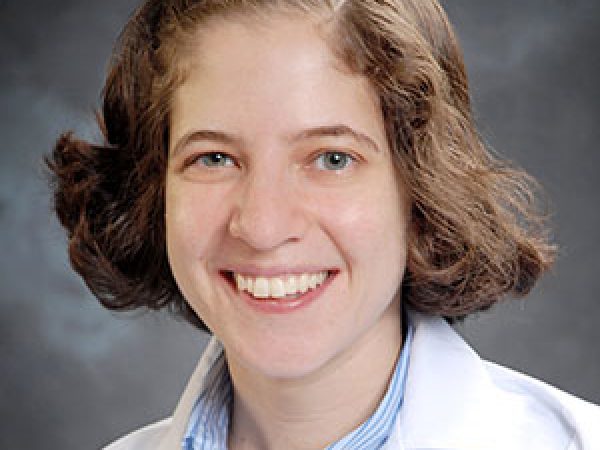

In a study published in Cancer Epidemiology, Biomarkers & Prevention, a journal of the American Association for Cancer Research, a team of researchers from Norway tested the strength of current methods of patient de-identification in preventing re-identification of individuals from large data sets.

“Researchers typically get access to de-identified data, that is, data without any personal identifying information, such as names, addresses, and Social Security numbers. However, this may not be sufficient to protect the privacy of individuals participating in a research study,” said the study’s senior author, Giske Ursin, MD, PhD, director of the Cancer Registry of Norway, Institute of Population-based Research, in an interview.

“People who have the permission to access such data sets have to abide by the laws and ethical guidelines, but there is always this concern that the data might fall into the wrong hands and be misused,” Ursin added. “As a data custodian, that’s my worst nightmare.”

K-anonymization and adding a “fuzzy factor” to scramble patient data

Ursin and colleagues used data containing 5,693,582 records from 911,510 women in the Norwegian Cervical Cancer Screening Program to test the strength of a de-identification method called k-anonymization, and another method in which a “fuzzy factor” is added. The data set included patients’ dates of birth, cervical screening dates and results, the names of the labs that ran the tests, any subsequent cancer diagnoses, and dates of death for deceased patients.

The team assessed the re-identification risk in three data sets. First, they used the original data to create a realistic data set (D1). Next, they k-anonymized the data by changing all the dates in the records to the 15th of the month (D2). Collapsing the data this way means that a given set of dates is shared by “k” people in the data set rather than by a single person.

Third, they fuzzed the data by adding a random factor between -4 and +4 months (excluding zero) to each month in the data set (D3). Adding a fuzzy factor to each patient’s records changes the months of birth, screening, and other events; however, the intervals between the procedures and the sequence of the procedures are retained, which ensures that the data set is still usable for research purposes.
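The two transformations can be sketched in a few lines of Python. This is an illustrative sketch, not the study's code: the function names are hypothetical, and it assumes that a single fuzzy factor is drawn per patient and applied to all of that patient's dates, which is one way to read the description above and is what keeps the intervals intact.

```python
import calendar
import random
from datetime import date

def collapse_to_mid_month(d: date) -> date:
    """D2-style collapsing: keep the year and month, set the day to the 15th."""
    return d.replace(day=15)

def shift_months(d: date, offset: int) -> date:
    """Move a date by a whole number of months, clamping the day if needed."""
    index = d.year * 12 + (d.month - 1) + offset
    year, month = divmod(index, 12)
    month += 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)

def draw_fuzzy_factor(rng: random.Random) -> int:
    """Pick a non-zero offset between -4 and +4 months."""
    return rng.choice([m for m in range(-4, 5) if m != 0])

def fuzz_patient(dates, rng):
    """Assumption: one offset per patient, applied to every date of that patient."""
    offset = draw_fuzzy_factor(rng)
    return [shift_months(d, offset) for d in dates]

def month_gap(a: date, b: date) -> int:
    """Number of calendar months from a to b."""
    return (b.year - a.year) * 12 + (b.month - a.month)

# Made-up example: birth date followed by two screening dates.
rng = random.Random(0)
record = [date(1970, 3, 2), date(2005, 11, 28), date(2009, 1, 9)]
collapsed = [collapse_to_mid_month(d) for d in record]
fuzzed = fuzz_patient(collapsed, rng)

# The months change, but the gaps between consecutive events do not.
gaps_before = [month_gap(a, b) for a, b in zip(collapsed, collapsed[1:])]
gaps_after = [month_gap(a, b) for a, b in zip(fuzzed, fuzzed[1:])]
print(gaps_before == gaps_after)
```

Because every date of a patient moves by the same number of months, the order of events and the month-level intervals between them are unchanged, while the calendar months themselves no longer match the real ones.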

The researchers found that just by changing the dates using the standard procedure of k-anonymization, they could drastically lower the chances of re-identifying most individuals in the data set.

In D1, the average risk of a prosecutor identifying a person was 97.1 percent. More than 94 percent of the patient records were unique, and therefore those patients ran the risk of being re-identified. In D2, the average risk of a prosecutor identifying a person dropped to 9.7 percent; however, 6 percent of the records were still unique and ran the risk of being re-identified.
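For readers who want to see how figures like these can be computed, here is a minimal sketch. It assumes the common "prosecutor" attack model from the re-identification literature, in which the attacker knows that a specific person is in the data set, so the risk for a record is one divided by the number of records that share its identifying values. Whether the study used exactly this calculation is an assumption; the function name and the toy records are hypothetical.

```python
from collections import Counter

def prosecutor_risk(records):
    """Return (average risk, share of unique records) for a list of records.

    Each record is a hashable tuple of quasi-identifiers, for example the
    collapsed birth month and screening month. Records with identical
    tuples form one equivalence class.
    """
    class_sizes = Counter(records)
    n = len(records)
    # Risk per record is 1 / (size of its equivalence class).
    average_risk = sum(1 / class_sizes[r] for r in records) / n
    # A record is unique when nobody else shares its values.
    unique_share = sum(1 for r in records if class_sizes[r] == 1) / n
    return average_risk, unique_share

# Toy data: three women share the same months, one stands alone.
toy = [
    ("1970-03", "2005-11"),
    ("1970-03", "2005-11"),
    ("1970-03", "2005-11"),
    ("1982-07", "2010-02"),
]
average_risk, unique_share = prosecutor_risk(toy)
print(average_risk, unique_share)
```

In this toy example there are two equivalence classes across four records, so the average risk is 0.5, and one record in four is unique. Collapsing dates lowers both numbers by merging records into larger classes.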

By adding a fuzzy factor, in D3, the average risk of a prosecutor identifying a person was 9.8 percent, and 6 percent of the records ran the risk of being re-identified. This meant that there were as many unique records in D3 as in D2. However, unlike k-anonymization, scrambling the months of all records in a data set by adding a fuzzy factor would make it more difficult for a prosecutor to link a record from this data set to the records in other data sets and re-identify an individual, Ursin explained.
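The linking argument can be illustrated with a toy example. Everything here is made up for illustration: the external register, the record identifiers, and the fixed offsets of +2 and -4 months stand in for randomly drawn fuzzy factors. It is a sketch of the idea, not a measurement of how much protection fuzzing gives.

```python
# Hypothetical external list an attacker might hold: name -> (birth year, birth month).
external = {"Person A": (1970, 3), "Person B": (1982, 7)}

# Released research data with dates collapsed to the month (true months kept).
released_collapsed = {"rec-1": (1970, 3), "rec-2": (1982, 7)}

# The same records after a per-patient fuzzy factor (+2 and -4 months here).
released_fuzzed = {"rec-1": (1970, 5), "rec-2": (1982, 3)}

def link_by_birth_month(released, register):
    """Link a released record to a name when exactly one person matches its birth month."""
    by_month = {}
    for name, year_month in register.items():
        by_month.setdefault(year_month, []).append(name)
    links = {}
    for record_id, year_month in released.items():
        candidates = by_month.get(year_month, [])
        if len(candidates) == 1:
            links[record_id] = candidates[0]
    return links

print(link_by_birth_month(released_collapsed, external))
print(link_by_birth_month(released_fuzzed, external))
```

With collapsed dates, both records link straight back to a name because the true birth months survive. With fuzzed dates, the shifted months no longer line up with the external list. In a real population a shifted month could also match the wrong person, which likewise frustrates a correct re-identification.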

“Every time a research group requests permission to access a data set, data custodians should ask the question, ‘What information do they really need and what are the details that are not required to answer their research question,’ and make every effort to collapse and fuzzy the data to ensure protection of patients’ privacy,” Ursin said.